16.1. Comparative effectiveness research

16.1.1. Introduction

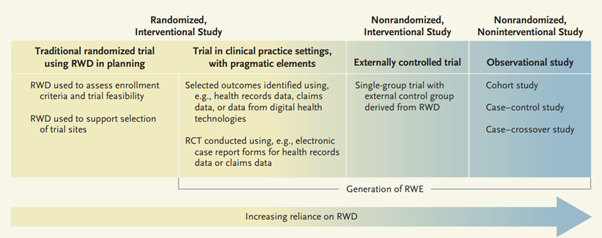

Comparative effectiveness research (CER) is designed to inform healthcare decisions for the prevention, the diagnosis and the treatment of a given health condition. CER therefore compares the potential benefits and harms of therapeutic strategies available in routine practice. The compared interventions may be related to similar treatments, such as competing medicines within the same class or with different mechanism of actions, or to different therapeutic approaches, such as surgical procedures and drug therapies. The comparison may focus only on the relative medical benefits and risks of the different options, or it may weigh both their costs and their benefits. The methods of comparative effectiveness research (Annu Rev Public Health 2012;33:425-45) defines the key elements of CER as a) a head-to-head comparison of active treatments, b) study population typical of the day-to-day clinical practice, and c) evidence focussed on informing healthcare and tailored to the characteristics of individual patients. CER is often discussed in the regulatory context of real-world evidence (RWE) generated by clinical trials or non-interventional (observational) studies using real-world data (RWD) (see Chapter 16.6).

The term ‘Relative effectiveness assessment (REA)’ is also used when comparing multiple technologies or a new technology against standard of care, while ‘rapid’ REA refers to performing an assessment within a limited timeframe in the case of a new marketing authorisation or a new indication granted for an approved medicine (see What is a rapid review? A methodological exploration of rapid reviews in Health Technology Assessments, Int J Evid Based Healthc. 2012;10(4):397-410).

16.1.2. Methods for comparative effectiveness research

CER may use a variety of data sources and methods. Methods to generate evidence for CER are divided below in four categories according to the data source: randomised clinical trials (RCTs), observational data, synthesis of published RCTs and cross-design synthesis.

16.1.2.1. CER based on randomised clinical trials

RCTs are considered the gold standard for demonstrating the efficacy of medicinal products but they rarely measure the benefits, risks or comparative effectiveness of an intervention in post-authorisation clinical practice. Moreover, relatively few RCTs are designed with an alternative therapeutic strategy as a comparator, which limits the utility of the resulting data in establishing recommendations for treatment choices. For these reasons, other methodologies such as pragmatic trials and large simple trials may be used to complement traditional confirmatory RCTs in CER. These trials are discussed in Chapter 4.2.7. The estimand framework described in the ICH E9-R1 Addendum on Estimands and Sensitivity Analysis in Clinical Trials to the Guideline on Statistical Principles for Clinical Trials (2019) should be considered in the planning of comparative effectiveness trials as it provides coherence and transparency on important elements of CER, namely definitions of exposures, endpoints, intercurrent events (ICEs), strategies to manage ICEs, approach to missing data and sensitivity analyses.

In order to facilitate comparison of results of CER between clinical trials, the COMET (Core Outcome Measures in Effectiveness Trials) Initiative aims at developing agreed minimum standardized sets of outcomes (‘core outcome sets’, COS) to be assessed and reported in effectiveness trials of a specific condition. Choosing Important Health Outcomes for Comparative Effectiveness Research: An Updated Review and User Survey (PLoS One 2016;11(1):e0146444) provides an updated review of studies that have addressed the development of COS for measurement and reporting in clinical trials. It is also worth noting that regulatory disease guidelines also establish outcomes of clinical interest to assess if a new therapeutic intervention works. Use of the same endpoint across RCTs thus facilitate comparisons.

16.1.2.2. CER using observational data

Use of observational data in CER

Although observational data from Phase IV trials, post-authorisation safety studies (PASS), or other RWD sources can be used to assess comparative effectiveness (and safety), it is generally inappropriate to use such data as a replacement for randomised evidence, especially in a confirmatory setting. Emulation of Randomized Clinical Trials With Nonrandomized Database Analyses: Results of 32 Clinical Trials (JAMA 2023;329(16):1376-85) concludes that RWE studies can reach similar conclusions as RCTs when design and measurements can be closely emulated, but this may be difficult to achieve. Concordance in results varied depending on the agreement metric. Emulation differences, chance, and residual confounding can contribute to divergence in results and are difficult to disentangle. When and How Can Real World Data Analyses Substitute for Randomized Controlled Trials? (Clin Pharmacol. Ther. 2017;102(6):924-33) suggests that RWE may be preferred over randomised evidence when studying a highly promising treatment for a disease with no other available treatment and where ethical considerations may preclude randomising patients to placebo, particularly if the disease is likely to result in severely compromised quality of life or mortality. In these cases, RWE could support medicines regulation by providing evidence on the safety and effectiveness of the therapy against the typical disease progression observed in the absence of treatment. This comparator disease trajectory may be assessed from historical controls that were diagnosed prior to the availability of the new treatment, or other sources.

When Can We Rely on Real-World Evidence to Evaluate New Medical Treatments? (Clin Pharmacol Ther. 2021; 111(1): 30–4) recommends that decisions regarding use of RWE in the evaluation of new treatments should depend on the specific research question, characteristics of the potential study settings and characteristics of the settings where study results would be applied, and take into account three dimensions in which RWE studies might differ from traditional clinical trials: use of RWD, delivery of real-world treatment and real-world treatment assignment. Observational data have, for instance, been used in proof-of-concept studies on anaplastic lymphoma kinase-positive non-small cell lung cancer, in pivotal trials on acute lymphoblastic leukaemia, thalassemia syndrome and haemophilia A, and in studies aimed at label expansion for epilepsy (see Characteristics of non-randomised studies using comparisons with external controls submitted for regulatory approval in the USA and Europe: a systematic review, BMJ Open. 2019;1;9(2):e024895; The Use of External Controls in FDA Regulatory Decision Making, Ther Innov Regul Sci. 2021;55(5):1019–35; and Application of Real-World Data to External Control Groups in Oncology Clinical Trial Drug Development, Front Oncol. 2022;11:695936).

Outside of specific circumstances, observational data and clinical trials are considered complementary to generate comprehensive evidence. For example, clinical trials may include historical controls from observational studies, or identify eligible study participants from disease registries. In defense of pharmacoepidemiology--embracing the yin and yang of drug research (N Engl J Med 2007;357(22):2219-21) shows that strengths and weaknesses of RCTs and observational studies may make both designs necessary in the study of drug effects. Hybrid approaches for CER allow to enrich clinical trials with observational data, for example:

-

Use of historical data to partially replace concurrent controls in randomised trials (see A roadmap to using historical controls in clinical trials - by Drug Information Association Adaptive Design Scientific Working Group (DIA-ADSWG), Orphanet J Rare Dis. 2020;15:69);

-

Use of historical data as prior evidence for relative treatment effects (see Prior Elicitation for Use in Clinical Trial Design and Analysis: A Literature Review, Int J Environ Res Public Health 2021;18(4):1833);

-

Construction of external control groups in single arm studies and Phase IV trials (see the draft FDA guidance Considerations for the Design and Conduct of Externally Controlled Trials for Drug and Biological Products (2023), A Review of Causal Inference for External Comparator Arm Studies (Drug Saf. 2022;45(8):815-37) and Methods for external control groups for single arm trials or long-term uncontrolled extensions to randomized clinical trials, Pharmacoepidemiol Drug Saf. 2020; 29(11):1382–92).

Methods for CER using observational data

The use of non-randomised data for causal inference is notoriously prone to various sources of bias. For this reason, it is strongly recommended to carefully design or select the source of RWD and to adopt statistical methods that acknowledge and adjust for major risks of bias (e.g. confounding, missing data).

A framework to address these challenges adopts counterfactual theory to treat the observational study as an emulation of a randomised trial. Target trial emulation (described in Chapter 4.2.6.) is a strategy that uses existing tools and methods to formalise the design and analysis of observational studies. It stimulates investigators to identify potential sources of concerns and develop a design that best addresses these concerns and the risk of bias.

Target trial emulation consists in designing first a hypothetical ideal randomised trial (“target trial”) that would answer the research question. A second step identifies how to best emulate the design elements of the target trial (including its eligibility criteria, treatment strategies, assignment procedure, follow-up, outcome, causal contrasts and pre-specified analysis plan) using the available observational data source and the analytic approaches to apply, given the trade-offs in an observational setting. This approach may prevent some common biases, such as immortal time bias or prevalent user bias while also identifying situations where adequate emulation may not be possible using the data at hand. Emulating a Target Trial of Interventions Initiated During Pregnancy with Healthcare Databases: The Example of COVID-19 Vaccination (Epidemiology 2023;34(2):238-46) describes a step-by-step specification of the protocol components of a target trial and their emulation including sensitivity analyses using negative controls to evaluate the presence of confounding and, alternatively to a cohort design, a case-crossover or case-time-control design to eliminate confounding by unmeasured time-fixed factors. Comparative Effectiveness of BNT162b2 and mRNA-1273 Vaccines in U.S. Veterans (N Engl J Med. 2022;386(2):105-15) used target trial emulation to design a study where recipients of each vaccine were matched in a 1:1 ratio according to their baseline risk factors. This design could not be applied where baseline measurements are not collected at treatment start, which may be the case in some patient registries. Use of the estimand framework of the ICH E9 (R1) Addendum to design the target trial may increase transparency on the choices and assumptions needed in the observational study to emulate key trial protocol components, such as the estimand, exposure, intercurrent events (and the strategies to manage them), the missing data and the sensitivity analyses, and therefore may help evaluate the extent to which the observational study addresses the same question as the target trial. Studies on the effect of treatment duration are also often impaired by selection bias: How to estimate the effect of treatment duration on survival outcomes using observational data (BMJ. 2018;360: k182) proposes a 3-step approach (cloning, censoring, weighting) that could be used with target trial simulation to achieve better comparability with the treatment assignment performed in the trial and overcome bias in the observational study.

Statistical inference methods that can be used for conducting causal inference in non-interventional studies are described in Chapter 6.2.3 and include multivariable regression (to adjust for confounding, missing data, measurement error, and other sources of bias), propensity score methods (to adjust for confounding bias), prognostic or disease risk score methods (to adjust for confounding), G-methods and marginal structure models (to adjust for time-dependent confounding), and imputation methods (to adjust for missing data). In some situations, these methods can also be used to adjust for instrumental variables or to estimate prior event rate ratios. Causal Inference in Oncology Comparative Effectiveness Research Using Observational Data: Are Instrumental Variables Underutilized? (J Clin Oncol. 2023;41(13):2319-2322) summarises the key assumption, advantages and disadvantages of methods of causal inference in CER to adjust for confounding, including regression adjustment, propensity scores, difference-in differences, regression discontinuity and instrumental variable, highlighting that different methods can be combined. In some cases, observational studies may substantially benefit from collecting instrumental variables, and this should be considered early on when designing the study. For example, Dealing with missing data using the Heckman selection model: methods primer for epidemiologists (Int J Epidemiol. 2023;52(1):5-13) illustrates the use of instrumental variables to address data that are missing not at random. Another example is discussed in Association of Osteoporosis Medication Use After Hip Fracture With Prevention of Subsequent Nonvertebral Fractures: An Instrumental Variable Analysis (JAMA Netw Open. 2018;1(3):e180826.), where instrumental variables are used to adjust for unobserved confounders.

The Agency for Healthcare Research and Quality (AHRQ)’s Developing a Protocol for Observational Comparative Effectiveness Research: A User’s Guide (2013) identifies minimal standards and best practices for observational CER. It provides principles on a wide range of topics for designing research and developing protocols, with relevant questions to be addressed and checklists of key elements to be considered. The RWE Navigator website discusses methods using observational RWD with a focus on effectiveness research, such as the source of RWD, study designs, approaches to summarising and synthesising the evidence, modelling of effectiveness and methods to adjust for bias and governance aspects. It also presents a glossary of terms and case studies.

A roadmap to using historical controls in clinical trials - by Drug Information Association Adaptive Design Scientific Working Group (DIA-ADSWG) (Orphanet J Rare Dis. 2020;15:69) describes methods to minimise disadvantages of using historical controls in clinical trials, i.e. frequentist methods (e.g. propensity score methods and meta-analytical approach) or Bayesian methods (e.g. power prior method, adaptive designs and the meta-analytic combined [MAC] and meta-analytic predictive [MAP] approaches for meta-analysis). It also provides recommendations on approaches to apply historical controls when they are needed while maximising scientific validity to the extent feasible.

In the context of hybrid studies, key methodological issues to be considered when combining RWD and RCT data include:

-

Differences between the RWD and RCT in terms of data quality and applicability,

-

Differences between available RWD sources (e.g., due to heterogeneity in studied populations, differences in study design, etc.),

-

Risk of bias (particularly for RWD),

-

Generalisability (especially for RCT findings beyond the overall treatment effect).

Methods for systematic reviews and meta-analyses of observational studies are presented in Chapter 10 and Annex 1 of this Guide. They are also addressed in the Cochrane Handbook for Systematic Reviews of Interventions and the Methods Guide for Effectiveness and Comparative Effectiveness Reviews presented in section 16.1.2.3 of this Chapter.

Assessment of observational studies used in CER

Given the potential for bias and confounding in CER based on observational non-randomised studies, the design and results of such studies need to be adequately assessed. The Good ReseArch for Comparative Effectiveness (GRACE) (IQVIA, 2016) provides guidance to enhance the quality of observational CER studies and support their evaluation for decision-making using the provided checklist. How well can we assess the validity of non-randomised studies of medications? A systematic review of assessment tools (BMJ Open 2021;11:e043961) examined whether assessment tools for non-randomised studies address critical elements that influence the validity of findings from non-randomised studies for CER. It concludes that major design-specific sources of bias (e.g., lack of new-user design, lack of active comparator design, time-related bias, depletion of susceptibles, reverse causation) and statistical assessment of internal and external validity are not sufficiently addressed in most of the tools evaluated, although these critical elements should be integrated to systematically investigate the validity of non-randomised studies on comparative safety and effectiveness of medications. The article also provides a glossary of terms, a description of the characteristics the tools and a description of methodological challenges they address.

Comparison of results of observational studies and RCTs

Even if observational studies are not appropriate to replace RCTs for many CER topics and cannot answer exactly the same research question, comparison of their results for a same objective is currently a domain of interest. The underlying assumption is that if observational studies consistently match the results of published trials and predict the results of ongoing trials, this may increase the confidence in the validity of future RWD analyses performed in the absence of randomised trial evidence. In a review of five interventions, Randomized, controlled trials, observational studies, and the hierarchy of research designs (N Engl J Med 2000;342(25):1887-92) found that the results of well-designed observational studies (with either a cohort or case-control design) did not systematically overestimate the magnitude of treatment effects. Interim results from the 10 first emulations reported in Emulating Randomized Clinical Trials With Nonrandomized Real-World Evidence Studies: First Results From the RCT DUPLICATE Initiative (Circulation 2021;143(10):1002-13) found that differences between the RCT and corresponding RWE study populations remained but the RWE emulations achieved a hazard ratio estimate that was within the 95% CI from the corresponding RCT in 8 of 10 studies. Selection of active comparator therapies with similar indications and use patterns enhanced the validity of RWE. Final results of this project are discussed in the presentation Lessons Learned from Trial Replication Analyses: Findings from the DUPLICATE Demonstration Project (Duke-Margolis Center for Health Policy Workshop, 10 May 2022). Emulation Differences vs. Biases When Calibrating Real-World Evidence Findings Against Randomized Controlled Trials (Clin Pharmacol Ther. 2020;107(4):735-7) provides guidance on how to investigate and interpret differences in treatment effect estimates from the two study types.

An important source of selection bias leading to discrepancies between results of observational studies and RCTs may be the use of prevalent drug users in the former. Evaluating medication effects outside of clinical trials: new-user designs (Am J Epidemiol 2003;158(9):915-20) explains the biases introduced by use of prevalent drug users and how a new-user (or incident user) design eliminate these biases by restricting analyses to persons under observation at the start of the current course of treatment. The incident user design in comparative effectiveness research (Pharmacoepidemiol Drug Saf. 2013; 22(1):1–6) reviews published CER case studies in which investigators had used the incident user design and discusses its strengths (reduced bias) and weaknesses (reduced precision of comparative effectiveness estimates). Unless otherwise justified, the incident user design should always be used.

16.1.2.3. CER based on evidence synthesis of published RCTs

The Cochrane Handbook for Systematic Reviews of Interventions (version 6.2, 2022) describes in detail the process of preparing and maintaining systematic reviews on the effects of healthcare interventions. Although its scope is focused on Cochrane reviews, it has a much wider applicability. It includes guidance on the standard methods applicable to every review (planning a review, searching and selecting studies, data collection, risk of bias assessment, statistical analysis, GRADE and interpreting results), as well as more specialised topics. The (GRADE) working group (Grading of Recommendations Assessment, Development, and Evaluation) offers a structured process for rating quality of evidence and grading strength of recommendations in systematic reviews, health technology assessment and clinical practice guidelines. The Methods Guide for Effectiveness and Comparative Effectiveness Reviews (AHRQ, 2018) provides resources supporting comparative effectiveness reviews. They are focused on the US Effective Health Care (EHC) programme and may therefore have limitations as regards their generalisability.

A pairwise meta-analysis of RCT results is used when the primary aim is to estimate the relative effect of two interventions. Network meta-analysis for indirect treatment comparisons (Statist Med. 2002;21:2313–24) introduces methods for assessing the relative effectiveness of two treatments when they have not been compared directly in a randomised trial but have each been compared to other treatments. Overview of evidence synthesis and network meta-analysis – RWE Navigator discussed methods and best practices and gives access to published articles on this topic. A prominent issue that has been overlooked by some systematic literature reviews and network meta-analyses is the fact that RCTs included in a network meta-analysis are usually not comparable with each other even though they all compared to placebo. Different screening and inclusion/exclusion criteria often create different patient groups, and these differences are rarely discussed in indirect comparisons. Before indirect comparison are performed, researchers should therefore check the similarity/differences between the RCTs.

16.1.2.4. CER based on cross-design synthesis

Decision-making should ideally be based on all available evidence, including both randomised and non-randomised studies, and on both individual patient data and published aggregated data. Clinical trials are highly suitable to investigate efficacy but less practical to study long-term outcomes or rare diseases. On the other hand, observational data offer important insights about treatment populations, long-term outcomes (e.g., safety), patient-reported outcomes, prescription patterns, active comparators, etc. Combining evidence from these two sources could therefore be helpful to reach certain effectiveness/safety conclusions earlier or to address more complex questions. Several methods have been proposed but are still experimental. The article Framework for the synthesis of non-randomised studies and randomised controlled trials: a guidance on conducting a systematic review and meta-analysis for healthcare decision making (BMJ Evid Based Med. 2022;27(2):109-19) uses a 7-step mixed methods approach to develop guidance on when and how to best combine evidence from non-randomised studies and RCTs to improve transparency and build confidence in summary effect estimates. It provides recommendations on the most appropriate statistical approaches based on analytical scenarios in healthcare decision making and highlights potential challenges for the implementation of this approach.

16.1.3. Methods for REA

Methodological Guidelines for Rapid REA of Pharmaceuticals (EUnetHTA, 2013) cover a broad spectrum of issues on REA. They address methodological challenges that are encountered by health technology assessors while performing rapid REA and provide and discuss practical recommendations on definitions to be used and how to extract, assess and present relevant information in assessment reports. Specific topics covered include the choice of comparators, strengths and limitations of various data sources and methods, internal and external validity of studies, the selection and assessment of endpoints and the evaluation of relative safety.

16.1.4. Specific aspects

16.1.4.1. Secondary use of data for CER

Electronic healthcare records, patient registries and other data sources are increasingly used in clinical effectiveness studies as they capture real clinical encounters and may document reasons for treatment decisions that are relevant for the general patient population. As they are primarily designed for clinical care and not research, information on relevant covariates and in particular on confounding factors may not be available or adequately measured. These aspects are presented in other chapters of this Guide (see Chapter 6, Methods to address bias and confounding; Chapter 8.2, Secondary use of data, and other chapters for secondary use of data in other contexts) but they need to be specifically considered in the context of CER. For example, the Drug Information Association Adaptive Design Scientific Working Group ( DIA-ADSWG) Roadmap to using historical controls in clinical trials (Orphanet J Rare Dis. 2020;15:69) describes the main sources of RWD to be used as historical controls, with an Appendix providing guidance on factors to be evaluated in the assessment of the relevance of RWD sources and resultant analyses.

16.1.4.2. Data quality

Data quality is essential to ensure the rigor of CER and secondary use of data requires special attention. Comparative Effectiveness Research Using Electronic Health Records Data: Ensure Data Quality (SAGE Research Methods, 2020) discusses challenges and share experiences encountered during the process of transforming electronic health record data into a research quality dataset for CER. This aspect and other quality issues are also discussed in Chapter 13 on Quality management.

In order to address missing information, some CER studies have attempted to integrate information from healthcare databases with information collected ad hoc from study subjects. Enhancing electronic health record measurement of depression severity and suicide ideation: a Distributed Ambulatory Research in Therapeutics Network (DARTNet) study (J Am Board Fam Med. 2012;25(5):582-93) shows the value of linking direct measurements and pharmacy claims data to data from electronic healthcare records. Assessing medication exposures and outcomes in the frail elderly: assessing research challenges in nursing home pharmacotherapy (Med Care 2010;48(6 Suppl):S23-31) describes how merging longitudinal electronic clinical and functional data from nursing home sources with Medicare and Medicaid claims data can support unique study designs in CER but pose many challenging design and analytic issues.

16.1.4.3. Transparency and reproducibility

Clear and transparent study protocols for observational CER should be used to support the evaluation, interpretation and reproducibility of results. Use of the HARPER protocol template (HARmonized Protocol Template to Enhance Reproducibility of hypothesis evaluating real-world evidence studies on treatment effects: A good practices report of a joint ISPE/ISPOR task force, Pharmacoepidemiol Drug Saf. 2023;32(1):44-55) is recommended to facilitate protocol development and addressing important design components. Public registration and posting of the protocol, disease and drug code lists, and statistical programming is strongly recommended to ensure that results from comparative effectiveness studies can be replicated using the same data and/or design, as emphasised in Journal of Comparative Effectiveness Research welcoming the submission of study design protocols to foster transparency and trust in real-world evidence (J Comp Eff Res. 2023;12(1):e220197). The HMA-EMA Catalogue of RWD studies and ClinicalTrials.gov should be used for this purpose.

16.2. Vaccine safety and effectiveness

16.2.1. Vaccine safety

16.2.1.1. General considerations

The book Vaccination Programmes | Epidemiology, Monitoring, Evaluation (Hahné, S., Bollaerts, K., & Farrington, P., Routledge, 2021) is a comprehensive textbook addressing most of the concepts presented in this Chapter. For contents related to safety monitoring of vaccines, it further builds on the 2014 ADVANCE Report on appraisal of vaccine safety methods that described a wide range of direct and indirect methods for vaccine safety assessment. Specific aspects related to vaccine safety and effectiveness are discussed in several documents:

-

The Report of the CIOMS/WHO Working Group on Definition and Application of Terms for Vaccine Pharmacovigilance (2012) provides definitions and explanatory notes for the terms ‘vaccine pharmacovigilance’, ‘vaccination failure’ and ‘adverse event following immunisation (AEFI)’.

-

The Guide to active vaccine safety surveillance: Report of CIOMS working group on vaccine safety – executive summary (Vaccine 2017;35(32):3917-21) describes the process for selecting the best approach to active surveillance considering key implementation issues, including in resource-limited countries.

-

The CIOMS Guide to Vaccine Safety Communication (2018) addresses vaccine safety communication aspects for regulators, vaccination policy-makers, and other stakeholders, when introducing vaccines in populations, based on selected examples.

-

The Brighton Collaboration provide a resource to facilitate and harmonise collection, analysis, and presentation of vaccine safety data, including case definitions for outcomes of interest, including adverse events of special interest (AESIs).

-

Module 4 (Surveillance) of the e-learning training course Vaccine Safety Basics of the World Health Organization (WHO) describes pharmacovigilance principles, causality assessment procedures, surveillance systems, and places safety in the context of the vaccine benefit/risk profile.

-

Recommendations on vaccine-specific aspects of the EU Pharmacovigilance System, including on risk management, signal detection and post-authorisation safety studies (PASS) are presented in Module P.I: Vaccines for prophylaxis against infectious diseases (EMA, 2013) of the Good Pharmacovigilance Practices (GVP).

-

The WHO Covid-19 vaccines: safety surveillance manual (WHO, 2020) was developed upon recommendation of the WHO Global Advisory Committee on Vaccine Safety (GACVS) and describes categories of surveillance strategies: passive, active, cohort event monitoring, and sentinel surveillance. While developed for COVID-19 vaccines, it can be used to guide pandemic preparedness activities for the monitoring of novel vaccines.

-

A vaccine study design selection framework for the postlicensure rapid immunization safety monitoring program (Am J Epidemiol. 2015;181(8):608-18) describes in a tabular form strengths and weaknesses of study designs and can be broadly applied to vaccine research questions beyond safety assessment.

16.2.1.2. Signal detection and validation

Besides a qualitative analysis of spontaneous case reports or case series, quantitative methods such as disproportionality analyses (described in Chapter 11) and observed-to-expected (O/E) analyses are routinely employed in signal detection and validation for vaccines. Several documents discuss the merits and review the methods of these approaches for vaccines.

Disproportionality analyses

GVP Module P.I: Vaccines for prophylaxis against infectious diseases describes aspects to be considered when applying methods for vaccine disproportionality analyses, including choice of the comparator group and use of stratification. Effects of stratification on data mining in the US Vaccine Adverse Event Reporting System (VAERS) (Drug Saf. 2008;31(8):667-74) demonstrates that stratification can reveal and reduce confounding and unmask some vaccine-event pairs not found by crude analyses. However, Stratification for Spontaneous Report Databases (Drug Saf. 2008;31(11):1049-52) highlights that extensive use of stratification in signal detection algorithms should be avoided, as it can mask true signals. Vaccine-Based Subgroup Analysis in VigiBase: Effect on Sensitivity in Paediatric Signal Detection (Drug Saf. 2012;35(4):335-46) further examines the effects of subgroup analyses based on the relative distribution of vaccine/non-vaccine reports in paediatric adverse drug reaction data (ADR) data. In Performance of Stratified and Subgrouped Disproportionality Analyses in Spontaneous Databases (Drug Saf. 2016;39(4):355-64), subgrouping by vaccines/non-vaccines resulted in a decrease in both precision and sensitivity in all spontaneous report databases that contributed data.

Optimization of a quantitative signal detection algorithm for spontaneous reports of adverse events post immunization (Pharmacoepidemiol Drug Saf. 2013;22(5): 477–87) explores various ways of improving performance of signal detection algorithms for vaccines.

Adverse events associated with pandemic influenza vaccines: comparison of the results of a follow-up study with those coming from spontaneous reporting (Vaccine 2011;29(3):519-22) reported a more complete pattern of reactions when using two complementary methods for first characterisation of the post-marketing safety profile of a new vaccine, which may impact on signal detection.

In Review of the initial post-marketing safety surveillance for the recombinant zoster vaccine (Vaccine 2020;38(18):3489-500), the time-to-onset distribution of zoster vaccine-adverse event pairs was used to generate a quantitative signal of unexpected temporal relationship.

Bayesian disproportionality methods have also been developed to generate disproportionality signals. In Association of Facial Paralysis With mRNA COVID-19 Vaccines: A Disproportionality Analysis Using the World Health Organization Pharmacovigilance Database (JAMA Intern Med. 2021;e212219), a potential safety signal for facial paralysis was explored using the Bayesian neural network method.

In Disproportionality analysis of anaphylactic reactions after vaccination with messenger RNA coronavirus disease 2019 vaccines in the United States (Ann Allergy Asthma Immunol. 2021; S1081-1206(21)00267-2) the CDC Wide-ranging Online Data for Epidemiologic Research (CDC WONDER) system was used in conjunction with proportional reporting ratios to evaluate whether rates of anaphylaxis cases reported in the VAERS database following administration of mRNA COVID-19 vaccines is disproportionately different from all other vaccines.

Signaling COVID-19 Vaccine Adverse Events (Drug Saf. 2022 Jun 23:1–16) discusses the extent, direction, impact, and causes of masking, an issue associated with signal detection methodologies, in which signals for a product of interest are hidden by the presence of other reported products, which may limit the understanding of the risks associated with COVID-19 and other vaccines, and delay their identification.

Observed-to-expected analyses and background incidence rates

In vaccine vigilance, O/E analyses compare the ‘observed’ number of cases of an adverse event occurring in vaccinated individuals and recorded in a data collection system (e.g. a spontaneous reporting system or an electronic healthcare database) and the ‘expected’ number of cases that would have naturally occurred in the same population without vaccination, estimated from available incidence rates in a non-vaccinated population. O/E analyses constitute a first step in the continuum of safety signal evaluation, and can guide further steps such as a formal pharmacoepidemiological study. GVP Module P.I: Vaccines for prophylaxis against infectious diseases (EMA, 2013) suggests conducting O/E analyses for signal validation and preliminary signal evaluation when prompt decision-making is required, and there is insufficient time to review a large number of individual cases. It discusses key requirements of O/E analyses: an observed number of cases detected in a passive or active surveillance system, near real-time exposure data, appropriately stratified background incidence rates calculated on a population similar to the vaccinated population (for the expected number of cases), the definition of appropriate risk periods (where there is suspicion and/or biological plausibility that there is a vaccine‐associated increased risk of the event) and sensitivity analyses around these measures. O/E analyses may require some adjustments for continuous monitoring due to inflation of type 1 error rates when multiple tests are performed. The method is further discussed in Pharmacoepidemiological considerations in observed‐to‐expected analyses for vaccines (Pharmacoepidemiol Drug Saf. 2016;25(2):215-22) and the review Near real‐time vaccine safety surveillance using electronic health records - a systematic review of the application of statistical methods (Pharmacoepidemiol Drug Saf. 2016;25(3):225-37).

O/E analyses require several pre-defined assumptions based on the requirements listed above. Each of these assumptions can be associated with uncertainties. How to manage these uncertainties is also addressed in Pharmacoepidemiological considerations in observed-to-expected analyses for vaccines (Pharmacoepidemiol Drug Saf. 2016;25(2):215–22). Observed-over-Expected analysis as additional method for pharmacovigilance signal detection in large-scaled spontaneous adverse event reporting (Pharmacoepidemiol Drug Saf. 2023;32(7):783-794) uses two examples of events of interest (idiopathic peripheral facial paralysis and Bell's palsy) in the context of the COVID-19 immunisation campaigns, when very large numbers of case safety reports (ICSRs) had to be timely handled.

Use of population based background rates of disease to assess vaccine safety in childhood and mass immunisation in Denmark: nationwide population based cohort study (BMJ. 2012;345:e5823) illustrates the importance of collecting background rates by estimating risks of coincident associations of emergency consultations, hospitalisations and outpatients consultations, with vaccination. Rates of selected disease events for several countries may vary by age, sex, method of ascertainment, and geography, as shown in Incidence Rates of Autoimmune Diseases in European Healthcare Databases: A Contribution of the ADVANCE Project (Drug Saf. 2021;44(3):383-95), where age-, gender-, and calendar-year stratified incidence rates of nine autoimmune diseases in seven European healthcare databases from four countries were generated to support O/E analyses. Guillain-Barré syndrome and influenza vaccines: A meta-analysis (Vaccine 2015; 33(31):3773-8) suggests that a trend observed between different geographical areas would be consistent with a different susceptibility of developing a particular adverse reaction among different populations. In addition, comparisons with background rates may be invalid if conditions are unmasked at vaccination visits (see Human papillomavirus vaccination of adult women and risk of autoimmune and neurological diseases, J Intern Med. 2018;283:154-65)).

Several studies have generated background incidence rates of AESIs for COVID-19 vaccines and discuss methodological challenges related to identifying AESIs in electronic health records (EHRs) (see The critical role of background rates of possible adverse events in the assessment of COVID-19 vaccine safety, Vaccine 2021;39(19):2712-18).

In Arterial events, venous thromboembolism, thrombocytopenia, and bleeding after vaccination with Oxford-AstraZeneca ChAdOx1-S in Denmark and Norway: population based cohort study (BMJ. 2021;373:n1114), observed age- and sex-specific rates of events among vaccinated people were compared with expected rates in the general population calculated from the same databases, thereby removing a source of variability between observed and expected rates. Where this is not possible, rates from multiple data sources have shown to be heterogeneous, and the choice of relevant data should take into account differences in database and population characteristics related to different diagnoses, recording and coding practices, source populations (e.g., inclusion of subjects from general practitioners and/or hospitals), healthcare systems, and linkage ability (e.g., to hospital records). This is further discussed in Characterising the background incidence rates of adverse events of special interest for covid-19 vaccines in eight countries: multinational network cohort study (BMJ. 2021;373:n1435) and Background rates of five thrombosis with thrombocytopenia syndromes of special interest for COVID-19 vaccine safety surveillance: Incidence between 2017 and 2019 and patient profiles from 38.6 million people in six European countries (Pharmacoepidemiol Drug Saf. 2022;31(5):495-510).

Contextualising adverse events of special interest to characterise the baseline incidence rates in 24 million patients with COVID-19 across 26 databases: a multinational retrospective cohort study (EClinicalMedicine. 2023;58:101932) used data from primary care, electronic health records, and insurance claims mapped to a common data model to characterise the incidence rates of AESIs, also following SARS-CoV-2 infection (considered a confounder), compared them to historical rates in the general population, and addressed issues of heterogeneity.

Historical comparator designs may generate false positives, as discussed in Bias, Precision and Timeliness of Historical (Background) Rate Comparison Methods for Vaccine Safety Monitoring: An Empirical Multi-Database Analysis (Front Pharmacol. 2021;12:773875), which explores the effect of empirical calibration on type 1 and 2 errors using outcomes presumed to be unrelated to vaccines (negative control outcomes) as well as positive controls (outcomes simulated to be caused by the vaccines).

Factors Influencing Background Incidence Rate Calculation: Systematic Empirical Evaluation Across an International Network of Observational Databases (Front Pharmacol. 2022;13:814198) examined the sensitivity of rates to the choice of design parameters using 12 data sources to systematically examine their influence on incidence rates using 15 AESIs for COVID-19 vaccines. Rates were highly influenced by the choice of the database (varying by up to a factor of 100), the choice of anchoring (e.g., health visit, vaccination, or arbitrary date) for the time-at-risk start, the choice of clean window and time-at-risk duration, but less so by secular or seasonal trends. It concluded that results should be interpreted in the context of study parameter choices.

Sequential methods

Sequential methods, as described in Early detection of adverse drug events within population-based health networks: application of sequential methods (Pharmacoepidemiol Drug Saf. 2007;16(12):1275-84), allow O/E analyses to be performed on a routine (e.g., weekly) basis using cumulative data with adjustment for multiplicity. Such methods are routinely used for near-real time surveillance in the Vaccine Safety Datalink (VSD) (see Near real-time surveillance for influenza vaccine safety: proof-of-concept in the Vaccine Safety Datalink Project, Am J Epidemiol 2010;171(2):177-88). Potential issues are described in Challenges in the design and analysis of sequentially monitored postmarket safety surveillance evaluations using electronic observational health care data (Pharmacoepidemiol Drug Saf. 2012;21(S1):62-71). A review of signals detected over 3 years in the VSD concluded that care with data quality, outcome definitions, comparator groups, and duration of surveillance, is required to enable detection of true safety issues while controlling for error (Active surveillance for adverse events: the experience of the Vaccine Safety Datalink Project, Pediatrics 2011;127(S1):S54-S64).

A new self-controlled case series method for analyzing spontaneous reports of adverse events after vaccination (Am J Epidemiol. 2013;178(9):1496-504) extends the self-controlled case series approach (see Chapter 4.2.3, and 16.2.2.2 in this Chapter) to explore and quantify vaccine safety signals from spontaneous reports using different assumptions (e.g., considering large amount of underreporting, and variation of reporting with time since vaccination). The method should be seen as a signal strengthening approach for quickly exploring a signal prior to a pharmacoepidemiological study (see for example, Kawasaki disease and 13-valent pneumococcal conjugate vaccination among young children: A self-controlled risk interval and cohort study with null results, PLoS Med. 2019;16(7):e100284).

The tree-based scan statistic (TreeScan) is a statistical data mining method that can be used for the detection of vaccine safety signals from large health insurance claims and electronic health records (Drug safety data mining with a tree-based scan statistic, Pharmacoepidemiol Drug Saf. 2013;22(5):517-23). A Broad Safety Assessment of the 9-Valent Human Papillomavirus Vaccine (Am J Epidemiol. 2021;kwab022) and A broad assessment of covid-19 vaccine safety using tree-based data-mining in the vaccine safety datalink (Vaccine. 2023;41(3):826-835) used the self-controlled tree-temporal scan statistic which does not require pre-specified outcomes or specific post-exposure risk periods. The method requires further evaluation of its utility for routine vaccine surveillance in terms of requirements for large databases and computer resources, as well as predictive value of the signals detected.

16.2.1.3. Study designs for vaccine safety assessment

A complete review of vaccine safety study designs and methods for hypothesis-testing studies is included in the ADVANCE Report on appraisal of vaccine safety methods (2014) and in Part IV of the book Vaccination Programmes | Epidemiology, Monitoring, Evaluation (Hahné, S., Bollaerts, K., & Farrington, P., Routledge, 2021).

Current Approaches to Vaccine Safety Using Observational Data: A Rationale for the EUMAEUS (Evaluating Use of Methods for Adverse Events Under Surveillance-for Vaccines) Study Design (Front Pharmacol. 2022;13:837632) provides an overview of strengths and limitations of study designs for vaccine safety monitoring and discusses the assumptions made to mitigate bias in such studies.

Methodological frontiers in vaccine safety: qualifying available evidence for rare events, use of distributed data networks to monitor vaccine safety issues, and monitoring the safety of pregnancy interventions (BMJ Glob Health. 2021;6(Suppl 2):e003540) addresses multiple aspects of pharmacoepidemiological vaccine safety studies, including study designs.

Cohort and case-control studies

There is a large body of published literature reporting on the use of the cohort design (and to a lesser extent, the case-control design) for the assessment of vaccine safety. Aspects of these designs presented in Chapters 4.2.1 and 4.2.2 are applicable to vaccine studies (for the cohort design, see also the examples of studies on background incidence rates in paragraph 16.2.2.1 of this Chapter). A recent illustration of the cohort design is provided in Clinical outcomes of myocarditis after SARS-CoV-2 mRNA vaccination in four Nordic countries: population based cohort study (BMJ Med. 2023 Feb 1;2(1):e000373) which used nationwide register data to compare clinical outcomes of myocarditis associated with vaccination, with COVID-19 disease, and with conventional myocarditis, with respect to readmission to hospital, heart failure, and death, using the Kaplan-Meier estimator approach.

Cohort-event monitoring

Prospective cohort-event monitoring (CEM) including active surveillance of vaccinated subjects using smartphone applications and/or web-based tools has been extensively used to monitor the safety of COVID-19 vaccines, as primary data collection was the only means to rapidly identify safety concerns when the vaccines started to be used at large scale. A definition of cohort-event monitoring is provided in The safety of medicines in public health programmes : pharmacovigilance, an essential tool (WHO, 2006, Chapter 6.5, Cohort event monitoring, pp 40-41). Specialist Cohort Event Monitoring studies: a new study method for risk management in pharmacovigilance (Drug Saf. 2015;38(2):153-63) discusses the rationale and features to address possible bias, and some applications of this design. COVID-19 vaccine waning and effectiveness and side-effects of boosters: a prospective community study from the ZOE COVID Study (Lancet Infect Dis. 2022:S1473-3099(22)00146-3) is a longitudinal, prospective, community-based study to assess self-reported systemic and localised adverse reactions of COVID-19 booster doses, in addition to effectiveness against infection (a confounder). Self-reported data may introduce information bias, as some participants might be more likely to report symptoms and some may drop out; however, multi-country CEM studies allow to include large populations, as shown in Cohort Event Monitoring of Adverse Reactions to COVID-19 Vaccines in Seven European Countries: Pooled Results on First Dose (Drug Saf. 2023;46(4):391-404).

Case-only designs

Traditional designs such as the cohort and case-control designs (see Chapters 4.2.1 and 4.2.2) may be difficult to implement in circumstances of high vaccine coverage (for example, in mass immunisation campaigns such as for COVID-19), a lack of an appropriate comparator group (e.g., unvaccinated), or a lack of adequate covariate information at the individual level. Frequent sources of confounding are underlying health status and factors influencing the likelihood of being vaccinated, such as access to healthcare or belonging to a high-risk group (see paragraph 16.2.4.1 on Studies in special populations in this Chapter). In such situations, case-only designs may provide stronger evidence than large cohort studies as they control for fixed individual-level confounders (such as demographics, genetics, or social deprivation) and have similar, sometimes higher, power (see Control without separate controls: evaluation of vaccine safety using case-only methods, Vaccine 2004;22(15-16):2064-70). Case-only designs are discussed in Chapter 4.2.3.

Several publications have compared traditional and case-only study designs for vaccine studies:

-

Epidemiological designs for vaccine safety assessment: methods and pitfalls (Biologicals 2012;40(5):389-92) used three designs (cohort, case-control, and self-controlled case-series (SCCS)) to illustrate aspects such as case definition, limitations of data sources, uncontrolled confounding, and interpretation of findings.

-

Comparison of epidemiologic methods for active surveillance of vaccine safety (Vaccine 2008; 26(26):3341-45) performed simulations to compare four designs (matched cohort, vaccinated-only (risk interval) cohort, case-control, and SCCS). The cohort design allowed for the most rapid signal detection, less false-positive error and highest statistical power in sequential analyses. However, one limitation of this simulation was the lack of case validation.

-

The simulation study Four different study designs to evaluate vaccine safety were equally validated with contrasting limitations (J Clin Epidemiol. 2006; 59(8):808-18) compared four designs (cohort, case-control, risk-interval and SCCS) and concluded that all were valid, however, with contrasting strengths and weaknesses. The SCCS, in particular, proved to be an efficient and valid alternative to the cohort design.

-

Hepatitis B vaccination and first central nervous system demyelinating events: Reanalysis of a case-control study using the self-controlled case series method (Vaccine 2007;25(31):5938-43) describes how the SCCS found similar results as the case-control design but with greater precision, based on the assumption that exposures are independent of earlier events, and recommended that case-series analyses should be conducted in parallel to case-control analyses.

It is increasingly considered good practice to use combined approaches, such as a cohort design and sensitivity analyses using a self-controlled method, as this provides an opportunity for minimising some biases that cannot be taken into account in the primary design (see for example, Myocarditis and pericarditis associated with SARS-CoV-2 vaccines: A population-based descriptive cohort and a nested self-controlled risk interval study using electronic health care data from four European countries; Front Pharmacol. 2022;13:1038043).

While the SCCS is suited to secondary use of data, it may not always be appropriate in situations where rapid evidence generation is needed, since follow-up time needs to be accrued. In such instances, design approaches include the SCRI method that can be used to shorten observation time (see The risk of Guillain-Barre Syndrome associated with influenza A (H1N1) 2009 monovalent vaccine and 2009-2010 seasonal influenza vaccines: Results from self-controlled analyses, Pharmacoepidemiol. Drug Saf 2012;21(5):546-52; and Chapter 4.2.3); O/E analyses using historical background rates (see Near real-time surveillance for influenza vaccine safety: proof-of-concept in the Vaccine Safety Datalink Project, Am J Epidemiol 2010;171(2):177-88); or traditional case-control studies (see Guillain-Barré syndrome and adjuvanted pandemic influenza A (H1N1) 2009 vaccine: multinational case-control study in Europe, BMJ 2011;343:d3908).

Nevertheless, the SCCS design is an adequate method to study vaccine safety, provided the main requirements of the method are taken into account (see Chapter 4.2.3). An illustrative example is shown in Bell's palsy and influenza(H1N1)pdm09 containing vaccines: A self-controlled case series (PLoS One. 2017;12(5):e0175539). In First dose ChAdOx1 and BNT162b2 COVID-19 vaccinations and cerebral venous sinus thrombosis: A pooled self-controlled case series study of 11.6 million individuals in England, Scotland, and Wales (PLoS Med. 2022;19(2):e1003927), pooled primary care, secondary care, mortality, and virological data were used. The authors discuss the possibility that the SCCS assumption of event-independent exposure may not have been satisfied in the case of cerebral venous sinus thrombosis (CVST) since vaccination prioritised risk groups, which may have caused a selection effect where individuals more likely to have an event were less likely to be vaccinated and thus less likely to be included in the analyses. In First-dose ChAdOx1 and BNT162b2 COVID-19 vaccines and thrombocytopenic, thromboembolic and hemorrhagic events in Scotland (Nat Med. 2021; 27(7):1290-7), potential residual confounding by indication in the primary analysis (a nested case-control design) was addressed by a SCCS to adjust for time-invariant confounders. Risk of acute myocardial infarction and ischaemic stroke following COVID-19 in Sweden: a self-controlled case series and matched cohort study (Lancet 2021;398(10300):599-607) showed that a COVID-19 diagnosis is an independent risk factor for the events, using two complementary designs in Swedish healthcare data: a SCCS to calculate incidence rate ratios in temporal risk periods following COVID-19 onset, and a matched cohort study to compare risks within 2 weeks following COVID-19 to the risk in the background population.

A modified self-controlled case series method for event-dependent exposures and high event-related mortality, with application to COVID-19 vaccine safety (Stat Med. 2022;41(10):1735-50) used data from a study of the risk of cardiovascular events, together with simulated data, to illustrate how to handle event-dependent exposures and high event-related mortality, and proposes a newly developed test to determine whether a vaccine has the same effect (or lack of effect) at different doses.

Estimating the attributable risk

The attributable risk of a given safety outcome (assuming a causal effect attributable to vaccination) is an important estimate to support public health decision-making in the context of vaccination campaigns. In the population-based cohort study Investigation of an association between onset of narcolepsy and vaccination with pandemic influenza vaccine, Ireland April 2009-December 2010 (Euro Surveill. 2014;19(17):15-25), the relative risk was calculated as the ratio of the incidence rates for vaccinated and unvaccinated subjects, while the absolute attributable risk was calculated as the difference in incidence rates. Safety of COVID-19 vaccination and acute neurological events: A self-controlled case series in England using the OpenSAFELY platform (Vaccine. 2022;40(32):4479-4487) used primary care, hospital admission, emergency care, mortality, vaccination, and infection surveillance data linked through a dedicated data analytics platform, and calculated the absolute risk of selected AESIs.

Case-coverage design

The case-coverage design is a type of ecological design using exposure information on cases, and population data on vaccination coverage to serve as control. It compares odds of exposure in cases to odds of exposure in the general population, similar to the screening method used in vaccine effectiveness studies (see below paragraph16.2.3.3 in this Chapter). However, it does not control for residual confounding and is prone to selection bias introduced by propensity to seek care (and vaccination) and by awareness of possible occurrence of a specific outcome, and does not consider underlying medical conditions, with limited comparability between cases and controls. In addition, it requires reliable and granular vaccine coverage data corresponding to the population from which cases are drawn, to allow control of confounding by stratified analyses (see for example, Risk of narcolepsy in children and young people receiving AS03 adjuvanted pandemic A/H1N1 2009 influenza vaccine: retrospective analysis, BMJ. 2013; 346:f794).

16.2.2. Vaccine effectiveness

16.2.2.1. General considerations

The book Vaccination Programmes | Epidemiology, Monitoring, Evaluation (Hahné, S., Bollaerts, K., & Farrington, P., Routledge, 2021) discusses the concept of vaccine effectiveness and provides further insight into the methods discussed in this section. The book Design and Analysis of Vaccine Studies (ME Halloran, IM Longini Jr., CJ Struchiner, Ed., Springer, 2010) presents methods and a conceptual framework of the different effects of vaccination at the individual and population level, and includes methods for evaluating indirect, total and overall effects of vaccination in populations.

A key reference is Vaccine effects and impact of vaccination programmes in post-licensure studies (Vaccine 2013;31(48):5634-42), which reviews methods for the evaluation of the effectiveness of vaccines and vaccination programmes and discusses design assumptions and biases to consider. A framework for research on vaccine effectiveness (Vaccine 2018;36(48):7286-93) proposes standardised definitions, considers models of vaccine failure, and provides methodological considerations for different designs.

Evaluation of influenza vaccine effectiveness: a guide to the design and interpretation of observational studies (WHO, 2017) provides an overview of methods to study influenza vaccine effectiveness, also relevant for other vaccines. Evaluation of COVID-19 vaccine effectiveness (WHO, 2021) provides guidance on how to monitor COVID-19 vaccine effectiveness using observational study designs, including considerations relevant to low- and middle-income countries. Methods for measuring vaccine effectiveness and a discussion of strengths and limitations are presented in Exploring the Feasibility of Conducting Vaccine Effectiveness Studies in Sentinel’s PRISM Program (CBER, 2018). Although focusing on the planning, evaluation, and modelling of vaccine efficacy trials, Challenges of evaluating and modelling vaccination in emerging infectious diseases (Epidemics 2021:100506) includes a useful summary of references for the estimation of indirect, total, and overall effects of vaccines.

16.2.2.2. Sources of exposure and outcome data

Data sources for vaccine studies largely rely on vaccine-preventable infectious disease surveillance (for effectiveness studies) and vaccine registries or vaccination data recorded in healthcare databases (for both safety and effectiveness studies). Considerations on validation of exposure and outcome data are provided in Chapter 5.

Infectious disease surveillance is a population-based, routine public health activity involving systematic data collection to monitor epidemiological trends over time in a defined catchment population, and can use various indicators. Data can be obtained from reference laboratories, outbreak reports, hospital records or sentinel systems, and use consistent case definitions and reporting methods. There is usually no known population denominator, thus surveillance data cannot be used to measure disease incidence. Limitations include under-detection/under-reporting (if passive surveillance) or over-reporting (e.g., due to improvements in case detection or introduction of new vaccines with increased disease awareness). Changes/delays in case counting or reporting can artificially reduce the number of reported cases, thus artificially increasing vaccine effectiveness. Infectious Disease Surveillance (International Encyclopedia of Public Health 2017;222-9) is a comprehensive review including definitions, methods, and considerations on use of surveillance data in vaccine studies. The chapter on Routine Surveillance of Infectious Diseases in Modern Infectious Disease Epidemiology (J. Giesecke. 3rd Ed. CRC Press, 2017) discusses how surveillance data are collected and interpreted, and identifies sources of potential bias. Chapter 8 of Vaccination Programmes | Epidemiology, Monitoring, Evaluation outlines the main methods of vaccine-preventable disease surveillance, considering data sources, case definitions, biases and methods for descriptive analyses.

Granular epidemiological surveillance data (e.g., by age, gender, pathogen strain) are of particular importance for vaccine effectiveness studies. Such data were available from the European Centre for Disease Prevention and Control and the WHO Coronavirus (COVID-19) Dashboard during the COVID-19 pandemic and, importantly, also included vaccine coverage data.

EHRs and claims-based databases constitute an alternative to epidemiological surveillance data held by national public health bodies, as illustrated in Using EHR data to identify coronavirus infections in hospitalized patients: Impact of case definitions on disease surveillance (Int J Med Inform. 2022;166:104842), which also recommends using sensitivity analyses to assess the impact of variations in case definitions.

Examples of vaccination registries, and challenges in developing such registries, are discussed in Vaccine registers-experiences from Europe and elsewhere (Euro Surveill. 2012;17(17):20159), Validation of the new Swedish vaccination register - Accuracy and completeness of register data (Vaccine 2020; 38(25):4104-10), and Establishing and maintaining the National Vaccination Register in Finland (Euro Surveill. 2017;22(17):30520). Developed by WHO, Public health surveillance for COVID-19: interim guidance describes key aspects of the implementation of SARS-CoV-2 surveillance, including a section on vaccine effectiveness monitoring in relation to surveillance systems.

16.2.2.3. Study designs for vaccine effectiveness assessment

Traditional cohort and case-control designs

The case-control design has been used to evaluate vaccine effectiveness, but the likelihood of bias and confounding is a potential important limitation. The articles Case-control vaccine effectiveness studies: Preparation, design, and enrollment of cases and controls (Vaccine 2017; 35(25):3295-302) and Case-control vaccine effectiveness studies: Data collection, analysis and reporting results (Vaccine 2017; 35(25):3303-8) provide recommendations on best practices for their design, analysis and reporting. Based on a meta-analysis of 49 cohort studies and 10 case-control studies, Efficacy and effectiveness of influenza vaccines in elderly people: a systematic review (Lancet 2005;366(9492):1165-74) highlights the heterogeneity of outcomes and study populations included in such studies and the high likelihood of selection bias. In A Dynamic Model for Evaluation of the Bias of Influenza Vaccine Effectiveness Estimates From Observational Studies (Am J Epidemiol. 2019;188(2):451-60), a dynamic probability model was developed to evaluate biases in passive surveillance cohort, test-negative, and traditional case-control studies.

Non-specific effects of vaccines, such as a decrease of mortality, have been claimed in observational studies but can be affected by bias and confounding. Epidemiological studies of the 'non-specific effects' of vaccines: I--data collection in observational studies (Trop Med Int Health 2009;14(9):969-76.) and Epidemiological studies of the non-specific effects of vaccines: II--methodological issues in the design and analysis of cohort studies (Trop Med Int Health 2009;14(9):977-85) provide recommendations for observational studies conducted in high mortality settings; however, these recommendations have wider relevance.

The cohort design has been widely used to monitor the effectiveness of COVID-19 vaccines; the following two examples reflect early times of the pandemic, and its later phase when several vaccines were used, reaching wider population groups and used according to different types of vaccination schedule depending on national policies: BNT162b2 mRNA Covid-19 Vaccine in a Nationwide Mass Vaccination Setting (N Engl J Med. 2021;384(15):1412-23) used data from a nationwide healthcare organisation to match vaccinated and unvaccinated subjects according to demographic and clinical characteristics, to assess effectiveness against infection, COVID-19 related hospitalisation, severe illness, and death. Vaccine effectiveness against SARS-CoV-2 infection, hospitalization, and death when combining a first dose ChAdOx1 vaccine with a subsequent mRNA vaccine in Denmark: A nationwide population-based cohort study (PLoS Med. 2021;18(12):e1003874) used nationwide linked registries to estimate VE against several outcomes of interest of a heterologous vaccination schedule, compared to unvaccinated individuals. As vaccination coverage increased, using a non-vaccinated comparator group became no longer feasible or suitable, and alternative comparators were needed (see paragraph below on comparative effectiveness).

More recently, pharmacoepidemiological studies have assessed the effectiveness of COVID-19 booster vaccination, which uncovered new methodological challenges, such as the need to account for time-varying confounding. Challenges in Estimating the Effectiveness of COVID-19 Vaccination Using Observational Data (Ann Intern Med. 2023;176(5):685-693) describes two approaches to target trial emulation to overcome limitations due to confounding or designs not considering the evolution of the pandemic over time and the rapid uptake of vaccination. Comparative effectiveness of different primary vaccination courses on mRNA-based booster vaccines against SARs-COV-2 infections: a time-varying cohort analysis using trial emulation in the Virus Watch community cohort (Int J Epidemiol. 2023 Apr 19;52(2):342-354) conducted trial emulation by meta-analysing eight cohort results to reduce time-varying confounding-by-indication.

Test-negative case-control design

The test-negative case-control design aims to reduce bias associated with misclassification of infection and confounding by healthcare-seeking behaviour, at the cost of sometimes difficult-to-test assumptions. The test-negative design for estimating influenza vaccine effectiveness (Vaccine 2013;31(17):2165-8) explains the rationale, assumptions and analysis of this design, originally developed for influenza vaccines. Study subjects were all persons seeking care for an acute respiratory illness, and influenza VE was estimated from the ratio of the odds of vaccination among subjects testing positive for influenza to the odds of vaccination among subject testing negative. Test-Negative Designs: Differences and Commonalities with Other Case-Control Studies with "Other Patient" Controls (Epidemiology. 2019 Nov;30(6):838-44) discusses advantages and disadvantages of the design in various circumstances. The use of test-negative controls to monitor vaccine effectiveness: a systematic review of methodology (Epidemiology 2020;31(1):43-64) discusses challenges of this design for various vaccines and pathogens, also providing a list of recommendations.

In Effectiveness of rotavirus vaccines in preventing cases and hospitalizations due to rotavirus gastroenteritis in Navarre, Spain (Vaccine 2012;30(3):539-43), electronic clinical reports were used to select cases (children with confirmed rotavirus infection) and test-negative controls (children who tested negative for rotavirus in all samples), under the assumption that the rate of gastroenteritis caused by pathogens other than rotavirus is the same in both vaccinated and unvaccinated subjects. A limitation is sensitivity of the laboratory test, which may underestimate vaccine effectiveness. In addition, if the viral type is not available, it is not possible to study the association between vaccine failure and a possible mismatch between vaccine strains and circulating strains. These learnings still apply today in the context of COVId-19 vaccines.

The article Theoretical basis of the test-negative study design for assessment of influenza vaccine effectiveness (Am J Epidemiol. 2016;184(5):345-53; see also the related Comments) uses directed acyclic graphs to characterise potential biases and shows how they can be avoided or minimised. In Estimands and Estimation of COVID-19 Vaccine Effectiveness Under the Test-Negative Design: Connections to Causal Inference (Epidemiology 2022;33(3):325-33), an unbiased estimator for vaccine effectiveness using the test-negative design is proposed under the scenario of different vaccine effectiveness estimates across patient subgroups.

In the multicentre study in 18 hospitals 2012/13 influenza vaccine effectiveness against hospitalised influenza A(H1N1)pdm09, A(H3N2) and B: estimates from a European network of hospitals (EuroSurveill 2015;20(2):pii=21011), influenza VE was estimated based on the assumption that confounding due to health-seeking behaviour is minimised since all individuals needing hospitalisation are likely to be hospitalised.

Postlicensure Evaluation of COVID-19 Vaccines (JAMA. 2020;324(19):1939-40) describes methodological challenges of the test-negative design applied to COVID-19 vaccines and discusses solutions to minimise bias. Covid-19 Vaccine Effectiveness and the Test-Negative Design (N Engl J Med. 2021;385(15):1431-33) uses the example of a published study in a large hospital network to provide considerations on how to report findings and assess their sensitivity to biases specific to the test-negative design. The study Effectiveness of the Pfizer-BioNTech and Oxford-AstraZeneca vaccines on covid-19 related symptoms, hospital admissions, and mortality in older adults in England: test negative case-control study (BMJ 2021;373:n1088) linked routine community testing and vaccination data to estimate effectiveness against confirmed symptomatic infection, COVID-19 related hospital admissions and case fatality, and estimated the odds ratios for testing positive to SARS-CoV-2 in vaccinated compared to unvaccinated subjects with compatible symptoms. The study also provides considerations on strengths and limitations of the test-negative design.

Case-population, case-coverage, and screening methods

These methods are related, and all include, to some extent, an ecological component such as vaccine coverage or epidemiological surveillance data at population level. Terms to refer to these designs are sometimes used interchangeably. The case-coverage design is discussed above in paragraph 16.2.2.2. Case-population studies are described in Chapter 4.2.5 and in Vaccine Case-Population: A New Method for Vaccine Safety Surveillance (Drug Saf. 2016;39(12):1197-209).

The screening method estimates vaccine effectiveness by comparing vaccination coverage in positive (usually laboratory confirmed) cases of a disease (e.g., influenza) with the vaccination coverage in the population from which the cases are derived (e.g., in the same age group). If representative data on cases and vaccination coverage are available, it can provide an inexpensive and rapid method to provide early estimates or identify changes in effectiveness over time. However, Application of the screening method to monitor influenza vaccine effectiveness among the elderly in Germany (BMC Infect Dis. 2015;15(1):137) emphasises that accurate and age-specific vaccine coverage data are crucial to provide valid estimates. Since adjusting for important confounders and assessing product-specific effectiveness is generally challenging, this method should be considered mainly as a supplementary tool to assess crude effectiveness. COVID-19 vaccine effectiveness estimation using the screening method – operational tool for countries (2022) also provides a good introduction to the method and its strengths and limitations.

Indirect cohort (Broome) method

The indirect cohort method is a case-control type design which uses cases caused by non-vaccine serotypes as controls, and uses surveillance data, instead of vaccination coverage data. Use of surveillance data to estimate the effectiveness of the 7-valent conjugate pneumococcal vaccine in children less than 5 years of age over a 9 year period (Vaccine 2012;30(27):4067-72) evaluated the effectiveness of a pneumococcal conjugate vaccine against invasive pneumococcal disease and compared to the results of a standard case-control design conducted during the same time period. The authors consider the method most useful shortly after vaccine introduction, and less useful in a setting of very high vaccine coverage and fewer cases. Using the indirect cohort design to estimate the effectiveness of the seven valent pneumococcal conjugate vaccine in England and Wales (PLoS One 2011;6(12):e28435) and Effectiveness of the seven-valent and thirteen-valent pneumococcal conjugate vaccines in England: The indirect cohort design, 2006-2018 (Vaccine 2019;37(32):4491-98) describe how the method was used to estimate effectiveness of various vaccine schedules as well as for each vaccine serotype.

Density case-control design

Effectiveness of live-attenuated Japanese encephalitis vaccine (SA14-14-2): a case-control study (Lancet 1996;347(9015):1583-6) describes a case-control study of incident cases in which the control group consisted of all village-matched children of a given age who were at risk of developing disease at the time that the case occurred (density sampling). The effect measured is an incidence density rate ratio. In Vaccine Effectiveness of Polysaccharide Vaccines Against Clinical Meningitis - Niamey, Niger, June 2015 (PLoS Curr. 2016;8), a case-control study compared the odds of vaccination among suspected meningitis cases to controls enrolled in a vaccine coverage survey performed at the end of the epidemic. A simulated density case-control design randomly attributing recruitment dates to controls based on case dates of onset was used to compute vaccine effectiveness. In Surveillance of COVID-19 vaccine effectiveness: a real-time case–control study in southern Sweden (Epidemiol Infect. 2022;150:1-15) a continuous density case-control sampling was performed, with the control group randomly selected from the complete study cohort as individuals without a positive test the same week as the case or 12 weeks prior.

Waning immunity

Studying how immunity conferred by vaccination wanes over time requires consideration of within-host dynamics of the pathogen and immune system, as well as the associated population-level transmission dynamics. Implications of vaccination and waning immunity (Proc Biol Sci. 2009; 276(1664):2071-80) combined immunological and epidemiological models of measles infection to examine the interplay between disease incidence, waning immunity and boosting.

Global Varicella Vaccine Effectiveness: A Meta-analysis (Pediatrics 2016; 137(3):e20153741) highlights the challenges to reliably measure effectiveness when some confounders cannot be controlled for, force of infection may be high, degree of exposure in study participants may be variable, and data may originate from settings where there is evidence of vaccine failure. Several estimates or studies may therefore be needed to accurately conclude in waning immunity. Duration of effectiveness of vaccines against SARS-CoV-2 infection and COVID-19 disease: results of a systematic review and meta-regression (Lancet 2022;399(10328):924-944) reviews evidence of changes in efficacy or effectiveness with time since full vaccination for various clinical outcomes; biases in evaluating changes in effectiveness over time, and how to minimise them, are presented in a tabular format. Effectiveness of Covid-19 Vaccines over a 9-Month Period in North Carolina (N Engl J Med. 2022;386(10):933-941) linked COVID-19 surveillance and vaccination data to estimate reduction in current risks of infection, hospitalisation and death as a function of time elapsed since vaccination, and demonstrated durable effectiveness against hospitalisation and death while waning protection against infection over time was shown to be due to both declining immunity and emergence of the delta variant.